AI to ROI: Data Readiness in a Brave New World

Turning Pipe Dreams into Actionable Plans for AI Success

The opening keynote of Gartner’s IT Symposium kicked off with a bang, treating an exclusive circle of tech and IT execs to a deep dive into the wonderful world of AI.

A wave of heightened energy swept through the crowd! Gone were the struggles of the past year. 2024 was shaping up to be a year of profound transformation, powered by the modern-day alchemy of AI.

Despite the backdrop of economic hurdles and geopolitical turbulence, AI has ignited a resurgence in next year’s IT budgets.

It’s a no-brainer! By 2026, 80% of companies will have incorporated AI into the core of their business. “Neglecting to do so would be a dumb-ass mistake.”

In true Jobsian fashion, Gartner unveiled a ‘One more thing’ moment. The projector’s beam flashed, capturing every gaze as it revealed:

‘Only 4% of companies have AI-ready data, and 96% are not ready.’

The room’s energy flipped instantly. Restlessness took hold as many shifted uneasily in their seats, while others found solace in the soft glow of their smartphones.

The naked truth stung like a hornet’s jab.

Only a small minority, let’s say about 4% of the crowd, reclined with boss-like confidence, smirking with self-satisfaction.

They’d faced an uphill battle, quietly laying the groundwork without recognition: enforcing GDPR compliance, embedding stringent data quality measures, and courageously securing funding for essential data management, all while worrying about their job security.

But finally, their hard work paid dividends!

Wishful thinking is not a strategy

Leveraging the business value of your data is a long game! It means doing a whole lot of unsexy projects with questionable immediate value, aiming for future rewards.

It’s forging a path toward building integrated capabilities that bring competitive differentiation. A path embarked upon by many, yet finished by few.

The hard truth is that more than 70% of companies are chasing quick wins, building unrealistic dreams inspired by a social media culture that normalises immediate gratification.

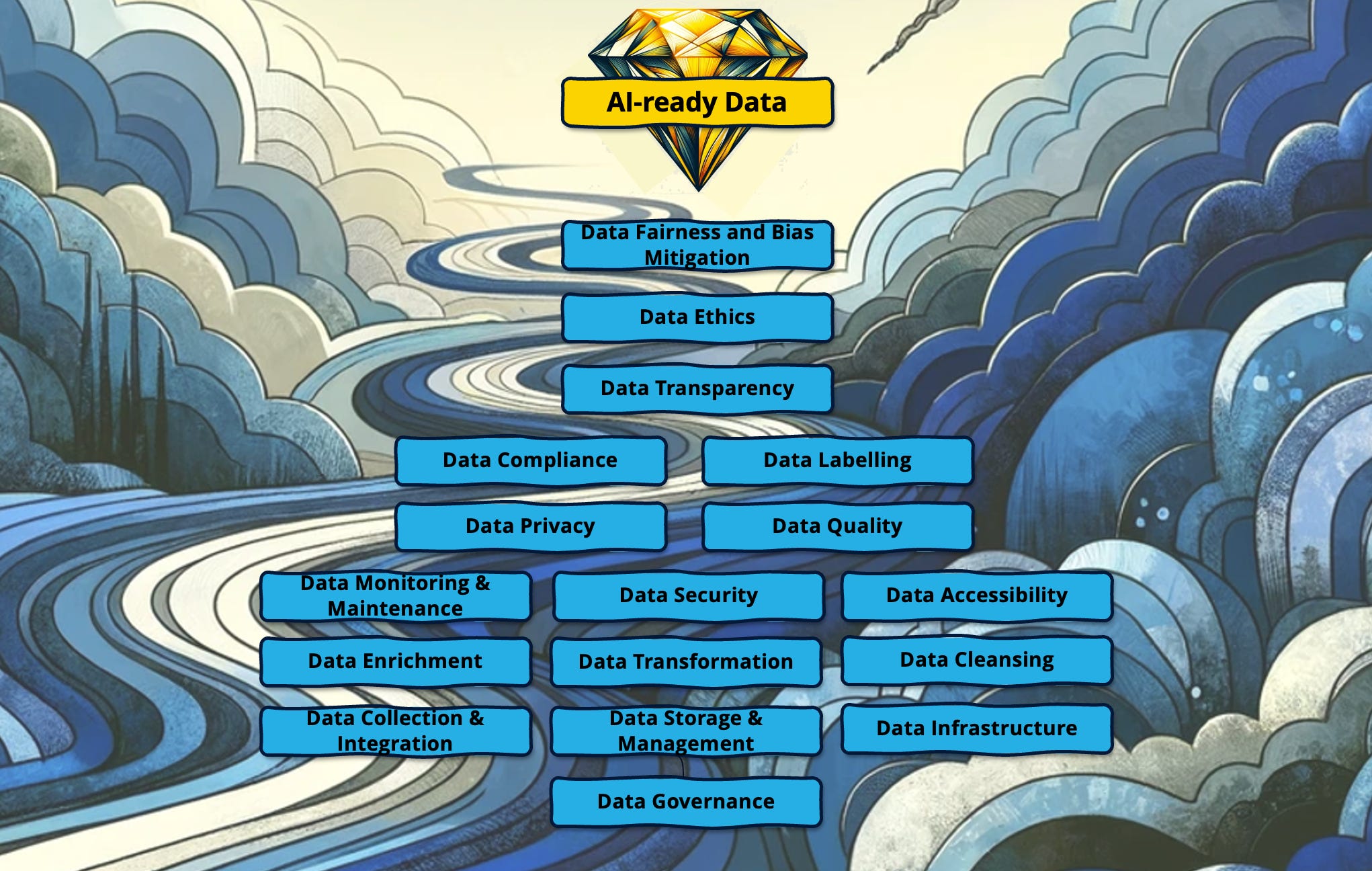

A pathway to AI-ready data

Hard work lies ahead, yet it’s not too late to drive meaningful progress.

Let’s now explore some crucial areas you must address to prepare your data for the “AI revolution”.

Data Governance

Data governance establishes the structure for managing data, ensuring compliance, integrity, and usability within an organization.

Key Features of Data Governance:

It outlines clear policies, processes, and standards for data management.

It designates specific roles for proper data handling and stewardship.

Successful data governance involves:

Engaging business users to take ownership of data, which is crucial but challenging.

Educating users on the value of quality data and its benefits to the organization.

Encouraging adherence to data standards through rewards and gamification.

Defining and assigning clear data stewardship roles, emphasizing collaboration between business and IT.

Creating feedback mechanisms for users to report data issues and aid continual improvement.

Providing accessible tools and platforms, like Business Glossaries and Data Catalogues, for effective data management and understanding.

Data governance is essential for AI, ensuring the accuracy and reliability of data and AI models, fostering responsible AI growth, and maintaining trust in AI systems.
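To make ownership and stewardship a little more tangible, here is a minimal Python sketch of a catalogue check. It assumes a hypothetical `CatalogueEntry` record with `owner` and `steward` fields and flags datasets that lack them; real data catalogue tools have their own schemas and APIs.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CatalogueEntry:
    """One dataset in a hypothetical data catalogue."""
    name: str
    description: str
    owner: Optional[str] = None    # business owner accountable for the data
    steward: Optional[str] = None  # day-to-day steward handling quality issues
    glossary_terms: tuple = ()     # links to business glossary terms


def governance_gaps(entries: List[CatalogueEntry]) -> List[str]:
    """Return readable issues for entries missing required governance metadata."""
    issues = []
    for entry in entries:
        if not entry.owner:
            issues.append(f"{entry.name}: no business owner assigned")
        if not entry.steward:
            issues.append(f"{entry.name}: no data steward assigned")
        if not entry.description.strip():
            issues.append(f"{entry.name}: missing description")
    return issues


catalogue = [
    CatalogueEntry("customers", "Master customer records", owner="Sales Ops", steward="CRM team"),
    CatalogueEntry("web_events", "", steward="Data Engineering"),
]

for issue in governance_gaps(catalogue):
    print(issue)
```

Run regularly, a check like this turns governance policy into a visible to-do list for data owners rather than a document nobody reads.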

Data Collection and Integration

For machine learning and AI, the quality of the input data determines the quality of the output.

Key Points for Effective Data Use in AI:

Diverse, extensive datasets are vital for AI to learn and perform well.

Integrating various data sources provides a complete, insightful view for AI analysis.

Real-time data feeds enable dynamic and context-aware AI applications.

Efficient data integration accelerates AI deployment and innovation.

High-quality, well-integrated data collection is essential for AI to perform well on complex tasks.
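As a small illustration of integrating sources, the sketch below joins hypothetical CRM and billing records on a shared `customer_id`. It is plain Python and assumes each key appears at most once in the billing data; at scale you would typically do this in SQL or a dataframe library.

```python
from typing import Dict, List


def integrate(crm: List[dict], billing: List[dict], key: str = "customer_id") -> List[dict]:
    """Left-join billing records onto CRM records by a shared key.

    Assumes at most one billing row per key (a later duplicate would win).
    Customers without billing data are kept and flagged, not dropped.
    """
    billing_by_key: Dict[str, dict] = {row[key]: row for row in billing}
    combined = []
    for customer in crm:
        match = billing_by_key.get(customer[key])
        merged = {**customer, **(match or {})}
        merged["has_billing"] = match is not None
        combined.append(merged)
    return combined


crm = [
    {"customer_id": "C1", "name": "Acme Ltd", "segment": "Enterprise"},
    {"customer_id": "C2", "name": "Bolt BV", "segment": "SMB"},
]
billing = [{"customer_id": "C1", "annual_spend": 120_000}]

for row in integrate(crm, billing):
    print(row)
```

Keeping unmatched customers, with an explicit `has_billing` flag, makes integration gaps visible instead of silently shrinking the dataset the model learns from.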

Data Storage and Management

Effective data infrastructure is vital for AI success, and storage and management are pivotal to it. Here's a concise breakdown:

Scalability: AI, especially generative models, demands expandable storage to sustain growth and performance.

Speed: AI's efficiency hinges on rapid data access for swift processing and real-time insights.

Lifecycle Management: Proper data management moves data between storage tiers as it ages, and archives or deletes it in line with retention rules, ensuring compliance.

Interoperability: Diverse AI data sources need storage systems that can handle various data types cohesively.

Cost-Efficiency: Affordable storage is essential to house growing data volumes without financial strain.

Such streamlined infrastructure supports AI's demanding requirements, underpinning advanced applications and innovations.
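Lifecycle management is often expressed as simple age-based rules. The sketch below assumes a hypothetical policy (hot for 30 days of inactivity, warm up to a year, archive after that, delete after seven years from creation); real thresholds depend on access patterns, storage pricing and your legal retention obligations.

```python
from datetime import date, timedelta

# Hypothetical policy thresholds, for illustration only.
HOT_DAYS = 30
WARM_DAYS = 365
RETENTION_DAYS = 7 * 365


def storage_action(last_accessed: date, created: date, today: date) -> str:
    """Decide where a data object should live under a simple age-based policy."""
    if (today - created).days > RETENTION_DAYS:
        return "delete"    # past the retention period
    idle_days = (today - last_accessed).days
    if idle_days <= HOT_DAYS:
        return "hot"       # fast, more expensive storage
    if idle_days <= WARM_DAYS:
        return "warm"      # cheaper storage, slower access
    return "archive"       # cold storage for rarely used data


today = date(2024, 1, 15)
print(storage_action(today - timedelta(days=3), date(2023, 6, 1), today))    # hot
print(storage_action(today - timedelta(days=200), date(2022, 1, 1), today))  # warm
print(storage_action(today - timedelta(days=500), date(2020, 1, 1), today))  # archive
print(storage_action(today - timedelta(days=10), date(2015, 1, 1), today))   # delete
```

Checking retention first means that even frequently used data is flagged once it outlives its permitted lifetime, which is usually what compliance teams expect.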

Data Infrastructure

Key features of a modern AI-ready data infrastructure can be summarized as follows:

Adaptable Architecture: To keep pace with evolving AI, infrastructure must be flexible.

Centralised Data Storage: Data lakes and warehouses aggregate vast amounts of data for AI use.

Computing Strength: AI often needs high processing power for complex tasks.

Strong Networking: Ensures rapid data transfer for real-time AI analytics.

Data Orchestration: Manages data flow for timely, format-correct AI processing.

Resource Elasticity: Dynamically scales resources for AI demand fluctuations.

Energy-Smart Design: Reduces costs and promotes sustainability in AI operations.

Such infrastructure not only enables AI but also future-proofs the organisation for evolving data use.
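At its core, data orchestration means running each step only after the steps it depends on. This sketch uses Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+) on a hypothetical pipeline; orchestrators such as Apache Airflow add scheduling, retries and monitoring on top of the same idea.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_crm": set(),
    "extract_billing": set(),
    "clean_customers": {"extract_crm"},
    "join_sources": {"clean_customers", "extract_billing"},
    "build_features": {"join_sources"},
    "train_model": {"build_features"},
}


def run_order(dag: dict) -> list:
    """Return an order in which every task runs after all of its dependencies."""
    return list(TopologicalSorter(dag).static_order())


print(run_order(pipeline))
```

If the dependencies contain a cycle, `static_order()` raises `graphlib.CycleError`, which is a useful early warning that the pipeline design itself is broken.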

Data Enrichment

Data enrichment enhances AI by adding context, improving decision accuracy across machine learning and generative models:

Depth and Context: Adds nuance that helps AI detect subtleties in the data.

Predictive Power: Increases prediction precision.

Completeness: Addresses data gaps for a fuller data foundation.

Quality Insights: Broadens the dataset for superior insights.

Practicality: Grounds AI applications in real-world relevance.

Competitive Edge: Reveals hidden correlations for strategic advantages.

Cross-Domain Use: Fuses diverse data for comprehensive analysis.

User Experience: Enables generative AI to produce user-centric results.

Bias Mitigation: Diversifies data to promote fairness in AI models.
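A common form of enrichment is joining records with reference data to add context. The sketch below adds a region to hypothetical orders using a small, illustrative postcode-prefix lookup; in practice the reference data would come from a maintained master dataset or third-party source.

```python
from typing import Dict, List

# Hypothetical reference data: Dutch postcode prefixes mapped to cities.
REGION_BY_PREFIX: Dict[str, str] = {
    "10": "Amsterdam",
    "30": "Rotterdam",
    "35": "Utrecht",
}


def enrich(orders: List[dict]) -> List[dict]:
    """Add a region attribute to each order, flagging postcodes with no match."""
    enriched = []
    for order in orders:
        prefix = order.get("postcode", "")[:2]
        region = REGION_BY_PREFIX.get(prefix)
        enriched.append({
            **order,
            "region": region if region is not None else "unknown",
            "region_matched": region is not None,  # makes enrichment gaps measurable
        })
    return enriched


orders = [
    {"order_id": 1, "postcode": "1012AB", "amount": 49.0},
    {"order_id": 2, "postcode": "9999ZZ", "amount": 15.5},
]
for row in enrich(orders):
    print(row)
```

The `region_matched` flag lets you track how much of the dataset the enrichment actually covers, which matters for the completeness benefit described above.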

Data Transformation

Data transformation refines raw data for AI consumption, crucial for machine learning, deep learning, and generative AI. Here’s its practical significance:

Compatibility: Adjusts data to fit AI model requirements for smooth processing.

Feature Engineering: Creates informative attributes to boost AI algorithm performance.

Normalisation: Scales data uniformly for consistent, fair AI analysis.

Dimensionality Reduction: Reduces the number of features, speeding up processing and often improving AI accuracy.

Data Conversion: Translates categorical/textual data to numerical for AI use.

Time Series Analysis: Structures data temporally for precise trend predictions.

Efficient Learning: Enables AI models to learn faster and perform better.

Data transformation optimises raw data to empower AI, ensuring it’s fit for purpose.
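Two of the transformations above, normalisation and data conversion, can be sketched in a few lines of plain Python. This is an illustrative min-max scaler and one-hot encoder, not a replacement for the equivalents in libraries such as scikit-learn.

```python
from typing import List


def min_max_scale(values: List[float]) -> List[float]:
    """Rescale numbers to the range [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(values: List[str]) -> List[List[int]]:
    """Convert categories into 0/1 indicator vectors, in sorted category order."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


ages = [18, 30, 42, 66]
print(min_max_scale(ages))                          # [0.0, 0.25, 0.5, 1.0]
print(one_hot(["red", "blue", "red", "green"]))     # [[0, 0, 1], [1, 0, 0], [0, 0, 1], [0, 1, 0]]
```

In a real pipeline, the minimum, maximum and category list should be learned from the training data only and then reused for new data; recomputing them on every batch would quietly change the meaning of the features.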

Data Cleansing

Data cleaning is crucial for AI performance and reliability, with continuous benefits:

Enhances Accuracy: Clean data ensures AI models learn from accurate information, boosting their precision.

Minimises Noise: It filters out irrelevant details, sharpening the focus of AI learning.

Reduces Bias: Proper cleaning helps reduce biases introduced by errors and duplicates, promoting fairer AI results.

Increases Efficiency: AI processes clean data faster, using fewer resources.

Boosts Predictive Power: The precision of clean data enhances AI predictive analytics.

Supports Decision-Making: AI outputs built on clean data support confident decision-making.

Improves Reliability: Consistently clean data makes AI outputs more reliable.
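Here is a minimal cleansing pass over hypothetical customer records: it standardises text fields, drops records without an identifier and removes duplicates. Matching duplicates on the normalised email address is an assumption made for this example; the right matching key depends on your data.

```python
from typing import List


def clean(records: List[dict]) -> List[dict]:
    """Standardise fields, drop records without an email, and remove duplicates.

    Duplicates are detected on the normalised email address; that matching
    key is an assumption for this example, not a universal rule.
    """
    seen = set()
    cleaned = []
    for rec in records:
        email = (rec.get("email") or "").strip().lower()
        if not email:
            continue  # incomplete record: no usable identifier
        if email in seen:
            continue  # duplicate of a record already kept
        seen.add(email)
        cleaned.append({
            "email": email,
            # collapse repeated and surrounding whitespace in names
            "name": " ".join((rec.get("name") or "").split()),
            # standardise country codes to upper case; empty becomes None
            "country": (rec.get("country") or "").strip().upper() or None,
        })
    return cleaned


raw = [
    {"email": " Jane.Doe@Example.com ", "name": "jane   doe", "country": "nl"},
    {"email": "jane.doe@example.com", "name": "Jane Doe", "country": "NL"},
    {"email": "", "name": "No Email", "country": "DE"},
    {"email": "sam@example.com", "name": "sam lee", "country": None},
]
for row in clean(raw):
    print(row)
```

Counting how many records each rule drops is worth logging too: a sudden jump in dropped rows is often the first sign of a broken upstream source.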

Data Monitoring and Maintenance

Effective data monitoring and maintenance ensures that the foundation upon which AI models are built remains solid and reliable.

Leverage Automated Tools: Use AI-driven tools for continuous data monitoring and rapid issue resolution.

Set Maintenance Schedules: Establish and adhere to a regular schedule for data review and cleanup to maintain its quality.

Involve Cross-functional Teams: Encourage collaboration between IT, data scientists, and business units to ensure data remains aligned with organizational needs.
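Automated monitoring can start with very simple checks. This sketch compares a new batch of a numeric column against a known baseline, alerting on a high share of missing values or a large shift in the mean; the thresholds are illustrative defaults, not recommendations, and dedicated data-quality or observability tools offer far richer tests.

```python
from statistics import mean
from typing import List, Optional


def check_batch(values: List[Optional[float]], baseline_mean: float,
                max_null_rate: float = 0.05, max_mean_shift: float = 0.2) -> List[str]:
    """Return alerts for a batch of values; an empty list means the batch passed."""
    if not values:
        return ["batch is empty"]
    alerts = []
    null_rate = sum(v is None for v in values) / len(values)
    if null_rate > max_null_rate:
        alerts.append(f"null rate {null_rate:.0%} exceeds {max_null_rate:.0%}")
    present = [v for v in values if v is not None]
    # Relative shift is undefined for a zero baseline, so that check is skipped.
    if present and baseline_mean:
        shift = abs(mean(present) - baseline_mean) / abs(baseline_mean)
        if shift > max_mean_shift:
            alerts.append(f"mean shifted {shift:.0%} from baseline")
    return alerts


print(check_batch([10.0, 11.0, 9.5, 10.5], baseline_mean=10.0))  # []
print(check_batch([15.0, None, 14.0, 16.0], baseline_mean=10.0))
```

The second call reports both a 25% null rate and a 50% mean shift; wiring alerts like these to the responsible data steward closes the loop with the cross-functional teams mentioned above.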

Data Accessibility

Data accessibility ensures that authorized users and systems can readily use data when needed, essential for integrating AI into business processes.

Key Aspects:

Easy Access: Systems for simple data retrieval with robust security.

Interoperability: Data sharing across different applications and departments.

Standard Formats: Data in universal formats for AI algorithm compatibility.

Importance:

AI Training: Accessible data is vital for training accurate machine learning models.

Generative AI: Broad data access enables nuanced outputs from generative AI.

Efficiency: Quick data access speeds up decision-making, boosting efficiency.

Accessible data is the backbone of AI-driven innovation and operational agility.

Data Security

Securing datasets is crucial in AI-integrated business operations. Essential security measures include:

End-to-End Encryption: Safeguard data from collection to storage to prevent unauthorized access and preserve data integrity for AI use.

Access Controls: Implement strict permissions to reduce the risk of internal breaches affecting AI systems.

Security Updates: Continually strengthen defenses by updating security protocols to match AI advancements.

Vulnerability Assessments: Identify and fix system weaknesses to maintain secure data for AI models.

API Security: Protect API endpoints to prevent data leaks and ensure safe data inputs for AI.

Audit Trails: Maintain logs for data interactions to monitor security incidents and aid in investigations.

SDLC Integration: Incorporate security from the beginning to avoid vulnerabilities in AI model development.

Stakeholder Education: Train on data security importance, best practices, and threat recognition.

Adopting these practices not only safeguards data but also builds trust in AI-generated content, influencing brand reputation and public perception.
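Access controls and audit trails work best together: every request is checked against a role, and every attempt is recorded. The sketch below is an in-memory illustration with a hypothetical role-to-permission map; a production system would rely on your identity and access management platform and write to an append-only, tamper-evident log.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission map (least privilege: grant only what is needed).
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"read:sales"},
    "ml_engineer": {"read:sales", "read:features", "write:features"},
    "admin": {"read:sales", "read:features", "write:features", "read:pii"},
}

audit_log: List[dict] = []


def access(user: str, role: str, permission: str) -> bool:
    """Check a permission and record every attempt, allowed or denied."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed


print(access("alice", "analyst", "read:sales"))  # True
print(access("alice", "analyst", "read:pii"))    # False
print(len(audit_log))                            # 2
```

Logging denied attempts as well as successful ones is deliberate: repeated denials are often the earliest signal of misuse or a misconfigured service.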

Data Privacy

Data privacy is fundamental to customer trust in AI, requiring careful management. Key privacy practices for AI include:

Minimizing Data Collection: Limit data to what’s essential, reducing privacy risks and aiding compliance.

Consent Management: Obtain clear consent for personal data use and provide simple opt-out options.

Anonymisation: De-identify data before applying AI to protect individual identities, especially in consumer AI services.

Privacy by Design: Integrate privacy into AI systems from the start.

Data Minimisation in Training: Use minimal personal data for AI training, considering synthetic data as an alternative.

Privacy Assessments: Regularly evaluate the privacy implications of AI data usage.

Transparent Policies: Clearly articulate AI data usage and privacy safeguards.

Data Subject Rights: Enable users to exercise their rights to correct or delete their data, bearing in mind that such changes can affect AI model outputs.

Cross-border Safeguards: Comply with international data transfer regulations.

Vendor Standards: Ensure third-party vendors meet your privacy criteria.

Responsible AI Training: Instruct AI to avoid deducing sensitive or private information.

These measures strengthen consumer trust by prioritizing privacy in AI applications.
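Minimisation and de-identification can be combined in a small preparation step before data reaches a training pipeline. The sketch below keeps only a hypothetical allow-list of fields and replaces the email with a keyed hash (HMAC-SHA256). Note that this is pseudonymisation, not full anonymisation: under GDPR, pseudonymised data still counts as personal data.

```python
import hashlib
import hmac

# Assumption: in production this key lives in a secrets manager, never in code.
SECRET_KEY = b"replace-with-a-secret-from-your-vault"

# Hypothetical allow-list: only the fields the model actually needs.
ALLOWED_FIELDS = {"age_band", "country", "purchases_last_90d"}


def pseudonymise_id(identifier: str) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same input always yields the same token, so records can still be
    joined, but the token cannot be recomputed or reversed without the key.
    """
    return hmac.new(SECRET_KEY, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_training(record: dict) -> dict:
    """Keep only allowed fields and swap the email for a pseudonymous token."""
    minimal = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    minimal["subject_token"] = pseudonymise_id(record["email"])
    return minimal


customer = {
    "email": "jane.doe@example.com",
    "full_name": "Jane Doe",
    "phone": "+31 6 1234 5678",
    "age_band": "35-44",
    "country": "NL",
    "purchases_last_90d": 4,
}
print(prepare_for_training(customer))
```

A keyed hash is used rather than a plain hash because common identifiers such as email addresses can otherwise be recovered by hashing candidate values and comparing.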

Data Quality

Data quality is critical for the accuracy and efficiency of AI algorithms. It ensures that the data used is precise, complete, and ready for analysis.

Essential Data Quality Aspects:

Accuracy: Error-free data that reflects the real world ensures reliable decision-making.

Completeness: Full datasets are necessary to prevent skewed AI interpretations.

Consistency: Standardised data allows effective integration and comparison.

Timeliness: Current data is crucial for AI’s relevance and responsiveness.

Data Quality Importance:

Machine Learning Reliability: AI model effectiveness relies heavily on data quality.

Generative AI Fidelity: The authenticity of generative AI outputs is dependent on high-quality data.

Insightful Business Decisions: Quality data leads to precise business insights and competitive edge.

Strategies for Data Quality Maintenance:

Quality at Source: Ensure initial data collection is of high quality.

Frequent Data Reviews: Regular audits help maintain and improve data quality.

Data Quality Software: Utilise smart tools to identify and mitigate data issues.

Data quality is not a one-off task but a continuous process integral to AI’s success.
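Quality dimensions become manageable once they are measured. This sketch scores a hypothetical inventory dataset on completeness, validity and timeliness, each as a share of rows passing a rule; the rules themselves are illustrative assumptions you would replace with your own definitions.

```python
from datetime import date
from typing import Dict, List


def quality_report(rows: List[dict], required: List[str], today: date,
                   max_age_days: int = 30) -> Dict[str, float]:
    """Score rows on completeness, validity and timeliness (each 0.0 to 1.0).

    Illustrative rules:
      - completeness: every required field is present and non-empty
      - validity: 'quantity' is a non-negative integer
      - timeliness: 'updated' is at most max_age_days old
    """
    n = len(rows)
    if n == 0:
        return {"completeness": 0.0, "validity": 0.0, "timeliness": 0.0}
    complete = sum(all(r.get(f) not in (None, "") for f in required) for r in rows)
    valid = sum(isinstance(r.get("quantity"), int) and r["quantity"] >= 0 for r in rows)
    fresh = sum(
        isinstance(r.get("updated"), date) and (today - r["updated"]).days <= max_age_days
        for r in rows
    )
    return {"completeness": complete / n, "validity": valid / n, "timeliness": fresh / n}


rows = [
    {"sku": "A1", "quantity": 5, "updated": date(2024, 1, 10)},
    {"sku": "A2", "quantity": -2, "updated": date(2023, 11, 1)},
    {"sku": "", "quantity": 3, "updated": date(2024, 1, 14)},
    {"sku": "A4", "quantity": "7", "updated": date(2024, 1, 2)},
]
print(quality_report(rows, required=["sku", "quantity"], today=date(2024, 1, 15)))
# {'completeness': 0.75, 'validity': 0.5, 'timeliness': 0.75}
```

Tracking these scores over time, rather than checking them once, is what turns data quality into the continuous process described above.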

Data Compliance

Data compliance ensures operations meet legal and ethical standards.

Key Compliance Goals:

Regulatory Adherence: Keep abreast of data protection laws like GDPR and emerging regulations such as the EU AI Act.

Industry Standard Conformity: Align AI operations with sector-specific best practices for compliance.

Compliance Assurance Methods:

Routine Audits: Perform consistent audits to verify compliance with relevant laws.

Training Programs: Educate staff on compliance responsibilities through regular training.

Policy Frameworks: Create clear policies outlining compliance protocols for the organisation.

Data compliance is key for responsible AI deployment, safeguarding legal integrity and building user trust. Prioritising compliance shields your company from legal risks and supports sustainable AI practices.

Data Labelling

Data labelling is the process of assigning descriptive tags to data so AI can interpret it, akin to a Rosetta Stone for algorithms.

Data Labelling Essentials:

Precise Tagging: Labels must accurately depict the data to avoid AI errors.

Consistent Standards: Apply uniform labelling standards to ensure model dependability.

Detailed Labels: Use granular labels for nuanced AI analysis.

Significance of Data Labelling:

Model Efficacy: Labels are critical for training supervised machine learning models efficiently.

AI Output Accuracy: AI results rely on the quality of data labelling.

Generative AI Quality: Accurate labels ensure the relevance of generated AI content.

Data Labelling Strategies:

Expert Annotators: Engage domain experts for high-quality labelling.

Automation Tools: Use AI-powered tools for efficient and accurate labelling.

Review Cycles: Regularly refine labels to meet changing AI needs.

Data labelling is an ongoing process essential for the success and reliability of AI models.
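One practical way to check label consistency is to have two annotators label the same sample and measure their agreement. The sketch below computes Cohen’s kappa, which corrects raw agreement for agreement expected by chance; the spam/ham labels are made-up example data, and scikit-learn’s `sklearn.metrics.cohen_kappa_score` provides the same metric if you already use that library.

```python
from collections import Counter
from typing import List


def cohens_kappa(labels_a: List[str], labels_b: List[str]) -> float:
    """Chance-corrected agreement between two annotators (Cohen's kappa).

    1.0 means perfect agreement; 0.0 means no better than chance.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["spam", "spam", "ham", "ham", "spam", "ham"]
annotator_2 = ["spam", "ham", "ham", "ham", "spam", "ham"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.667
```

Here the annotators agree on five of six items (83%), but because half of that agreement would be expected by chance, kappa is about 0.67. A low score is a prompt to revisit the labelling guidelines during the next review cycle.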

Data Transparency

Data transparency is an essential element of trustworthy AI systems. It gives stakeholders a clear view of how data is sourced, processed, and used to make decisions. Without transparency, AI systems lack credibility and struggle to earn confidence. Transparency is the foundation upon which trust is built, and in both our physical and our digital worlds, trust is invaluable.

Facets of Data Transparency:

Open Data Lineage: Clearly document the origin, journey, and transformation of data, ensuring that stakeholders can trace its path.

Accessible Decision Logic: Demystify the AI’s decision-making by making the underlying logic accessible and understandable to non-technical users.

Clarity in Data Usage: Communicate openly about the types of data collected, the purpose of its use, and who has access to it.

Building Trust through Transparency:

Engagement with Users: Engage with end-users to provide insights into how their data influences AI-driven outcomes.

Transparent Reporting: Regularly publish transparent reports detailing AI performance, incidents, and improvements.

Education and Communication: Educate all users on the significance of data transparency and the role it plays in ethical AI usage.

Data transparency transforms the AI experience from a black box into a trusted process.
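Open data lineage can start as a simple, structured record of each step a dataset goes through. The sketch below uses a hypothetical `LineageStep` schema and short content hashes so each step’s output can be traced and verified; the source file name is made up, and open standards such as OpenLineage define richer, interoperable formats for the same purpose.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class LineageStep:
    """One documented transformation in a dataset's history (hypothetical schema)."""
    step: str
    inputs: List[str]
    output: str
    description: str
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def fingerprint(rows: list) -> str:
    """Short content hash identifying exactly which data a step produced."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


lineage: List[LineageStep] = []

raw = [{"id": 1, "amount": "49.0"}, {"id": 2, "amount": "15.5"}]
lineage.append(LineageStep("ingest", ["crm_export.csv"], f"raw@{fingerprint(raw)}",
                           "Loaded nightly CRM export"))

typed = [{**r, "amount": float(r["amount"])} for r in raw]
lineage.append(LineageStep("cast_types", [lineage[-1].output], f"typed@{fingerprint(typed)}",
                           "Converted amount from text to float"))

print(json.dumps([asdict(s) for s in lineage], indent=2))
```

Because each step names its inputs, anyone can walk the chain backwards from a model’s training data to the original source, which is exactly what the “trace its path” requirement asks for.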

Data Ethics

Data ethics is the responsible management of information, prioritizing what data should do over what it can do.

Key Aspects of Data Ethics:

Transparency: Be clear about data collection, usage, and purpose, and consider establishing an AI Ethics Board for oversight.

Accuracy: Ensure data quality to avoid AI biases, akin to Airbnb’s approach to diverse data representation.

Fairness: Develop non-discriminatory algorithms, similar to Google’s commitment to auditing AI for bias.

Accountability: Create policies for AI accountability, including an ethical framework and product assessment processes.

Embedding these principles into your AI strategy ensures the technology benefits all stakeholders and upholds ethical standards.

Data Fairness and Bias Mitigation

Data fairness and bias mitigation ensure AI decisions are impartial and equitable.

For Data Fairness:

Use diverse data that reflects real-world variety to guide AI towards unbiased outcomes.

Establish protocols to identify and assess biases in data and AI outputs.

Ensure equitable representation of groups in training data to prevent bias.

To Mitigate Bias:

Apply pre-processing techniques, such as reweighting or resampling, to adjust for identified biases before AI training.

Conduct ongoing AI system audits to address emerging biases.

Maintain transparency in AI decision-making to allow fairness evaluations.
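Auditing for bias requires a concrete metric. The sketch below computes per-group selection rates and the demographic parity difference (the gap between the highest and lowest rate) on made-up decisions. It is one fairness definition among several, and different definitions can conflict; libraries such as Fairlearn offer tested implementations of this and other metrics.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def selection_rates(outcomes: List[Tuple[str, int]]) -> Dict[str, float]:
    """Share of positive decisions (1) per group, from (group, decision) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, decision in outcomes:
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(outcomes: List[Tuple[str, int]]) -> float:
    """Gap between the highest and lowest group selection rates (0.0 means parity)."""
    rates = selection_rates(outcomes)
    return max(rates.values()) - min(rates.values())


decisions = [("A", 1), ("A", 1), ("A", 0), ("A", 1),
             ("B", 1), ("B", 0), ("B", 0), ("B", 0)]
print(selection_rates(decisions))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(decisions))  # 0.5
```

Recomputing a metric like this on every model release, and publishing the result, supports both the ongoing audits and the transparency called for above.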

The power of getting the basics right

This year’s Gartner IT Symposium told us that AI is the next big thing for technology leaders. If companies haven’t adopted AI by 2026, they’ll really miss out. A sense of FOMO is kicking in again.

Many were surprised to hear that only a few companies are ready for AI. But some people in the audience were smiling: they knew they were ahead because they’d been working hard on getting the basics right.

Most companies want quick and easy success, but that’s not how it works with AI. It takes time and effort to get it right.

And now, what’s next?

I hope you found the article enjoyable and insightful. Please feel free to use it as a springboard for discussions and planning for a future where your company leads in AI.

The future is shaped by our choices today, one data point at a time.